Report on the workshop "Large Language Models (LLM). NVIDIA Software and Hardware stack for LLM"

Moderators:

Diana Rakhimova – PhD, Associate Professor of the Department of Information Systems

Kassymbek Nurislam – Senior Lecturer at the Department of Computer Science

Tulepberdinova Gulnur – Candidate of Physico-Mathematical Sciences, Associate Professor of the Department of Artificial Intelligence and Big Data

Date: February 23, 2024

Venue: Al-Farabi Kazakh National University, Almaty, as part of the Digital Farabi Forum

Organizers: Al-Farabi Kazakh National University, Faculty of Information Technology

Format of the event: hybrid

Participants: representatives of universities and research institutes, such as:

- Al-Farabi KazNU;

- WKATU named after Zhangir Khan;

- Korkyt Ata Kyzylorda University;

- Esil University;

- Astana International University;

- Yessenov University;

- Institute of Mathematics and Mathematical Modeling;

- Institute of Information and Computing Technologies;

- Maqsut Narikbayev University;

- L.N. Gumilyov Eurasian National University;

- Institute of Linguistics named after A. Baitursynov;

- Turan University;

- Astana IT University;

- Kazakh-British Technical University;

- Kyrgyz State Technical University named after I. Razzakov, among others.

Total number of participants: 86 (42 in person, 44 online).

Pre-registration of participants: online.

Purpose and content

The purpose of the workshop: to introduce NVIDIA's software and hardware stack for LLMs and to give an overview of configuring, training, and applying large language models.

Report:

- Anton Joraev (Senior Corporate Business Development Manager, NVIDIA Corporation) and Oleg Ovcharenko (NVIDIA Solutions Architect for energy, high-performance computing, and AI, NVIDIA Corporation): "Configuring, training, and applying large language models. NVIDIA Software and Hardware stack for LLM".

Summary of the report

Topics covered:

• Intro and challenges

• Data handling (RAPIDS)

• Training (NeMo)

• Customization

• Optimization (TRT-LLM)

• Inference (Triton Server)

• Moderation (NeMo Guardrails)

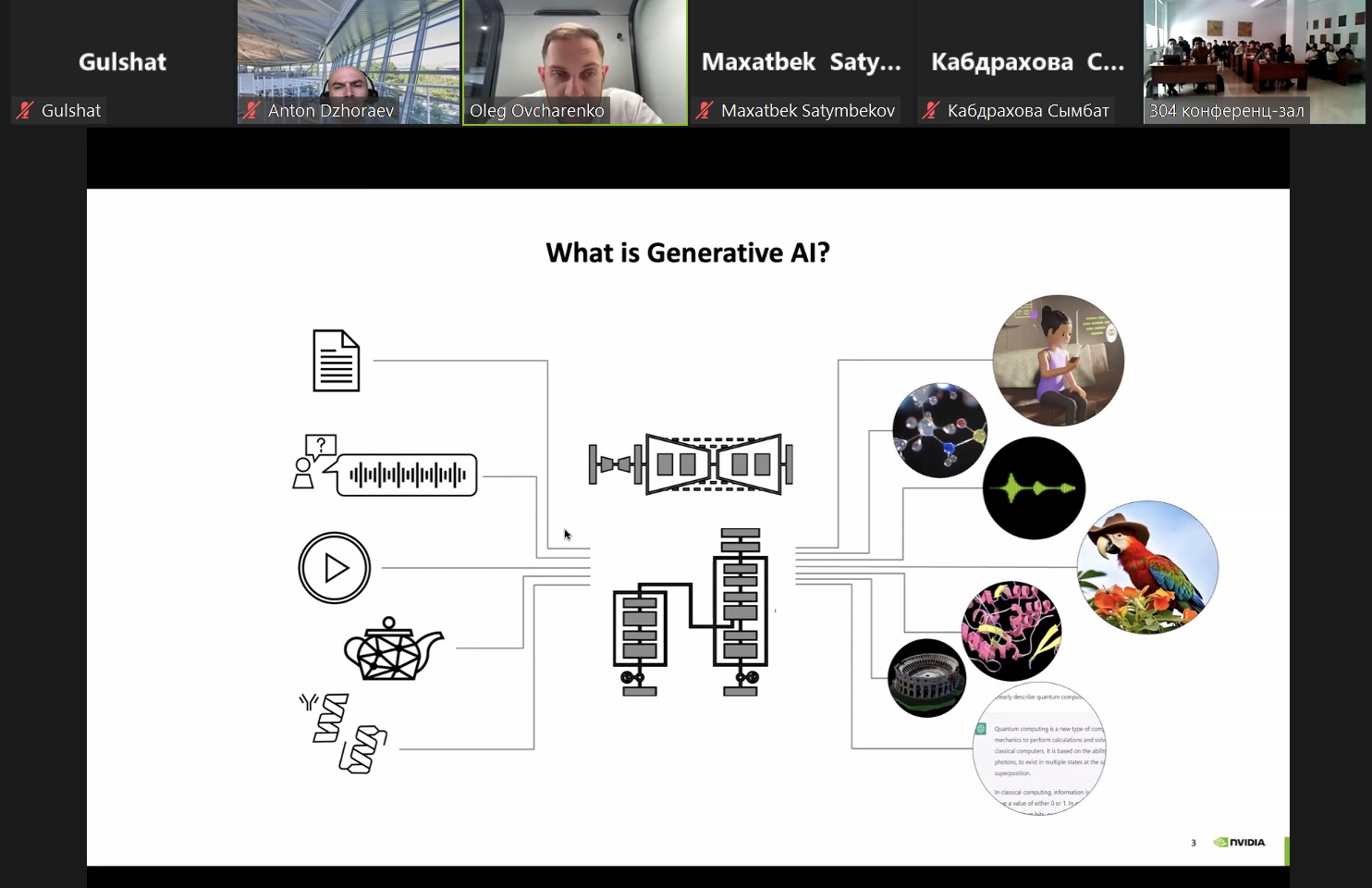

The workshop "Large Language Models (LLM). NVIDIA Software and Hardware Stack for LLM", conducted by NVIDIA, provided a detailed overview of current developments and future trends in generative AI, focusing on NVIDIA's contributions to hardware acceleration, software frameworks, and AI model development. The introduction addressed the importance of generative AI and its potential impact across a variety of sectors.

The presentation then highlighted the architecture and capabilities of the NVIDIA platform, emphasizing its support for AI workflows, including large language models and other generative AI tasks.

Key sections of the presentation covered the rapid progress of AI, foundation models, how large language models work, and NVIDIA's NeMo framework for efficient training and deployment of AI models. The speakers discussed the challenges companies face when building generative AI applications and showed how the NVIDIA AI platform addresses them with tools for data processing, model training, customization, and optimization for inference. They emphasized the training performance of the NeMo framework, the role of data curation in model efficiency, and the ability to take advantage of NVIDIA's accelerated computing with minimal code changes.

Attention was also given to the democratization of data through open-source software, the RAPIDS suite of tools for accelerated data processing, and the benefits of NVIDIA's GPU-accelerated libraries and tools for machine learning, communications analytics, and other AI applications.

Conclusion

- The workshop reviewed NVIDIA solutions for working with large language models (LLMs) and provided a brief guide to using them, giving participants the opportunity to apply powerful LLM tools.

Relevance of the event:

- LLMs are a rapidly developing and highly relevant area of AI, and the workshop reviewed tools for working with them. Equally relevant is the task of accelerating models through parallelism; the various types of parallelism were discussed at the workshop.

Impact: Participants gained a clear understanding of how GPUs are used to accelerate models, of various parallelization techniques, and of how powerful LLM tools can be applied.